Download a

Postscript

or PDF

version of this paper.

Download all the files for this paper as a

gzipped tar archive.

Generate another paper

Back to the SCIgen homepage

A Case for Forward-Error Correction

Michel Husson and Eddy Mitchell

Abstract

The implications of real-time modalities have been far-reaching and

pervasive. In fact, few physicists would disagree with the

evaluation of lambda calculus, which embodies the typical principles

of software engineering. We motivate new semantic models, which we

call Traunce.

Table of Contents

1) Introduction

2) Related Work

3) Design

4) Implementation

5) Evaluation and Performance Results

6) Conclusion

1 Introduction

In recent years, much research has been devoted to the refinement of

vacuum tubes that would make constructing consistent hashing a real

possibility; unfortunately, few have analyzed the study of thin

clients. Next, for example, many systems control stable information.

Similarly, to put this in perspective, consider the fact that

foremost electrical engineers continuously use 802.11b to realize

this aim. To what extent can von Neumann machines be developed to

answer this riddle?

In this position paper we disprove not only that the location-identity

split and operating systems are never incompatible, but that the same

is true for semaphores. Next, the drawback of this type of method,

however, is that 802.11 mesh networks and public-private key pairs

can cooperate to realize this purpose. However, this method is

generally useful. Traunce simulates the study of model checking.

It should be noted that Traunce visualizes metamorphic

archetypes. Obviously, our methodology manages wearable communication.

Nevertheless, this solution is fraught with difficulty, largely due to

vacuum tubes. Existing self-learning and pseudorandom algorithms use

the emulation of Smalltalk to provide the Turing machine. Such a claim

is largely a confusing purpose but has ample historical precedence. It

should be noted that our solution runs in O(2n) time. For example,

many frameworks enable probabilistic theory. Therefore, our system

explores superblocks.

The contributions of this work are as follows. We validate that

lambda calculus and vacuum tubes are generally incompatible. We

propose an algorithm for the emulation of hierarchical databases (

Traunce), disconfirming that evolutionary programming and the UNIVAC

computer are continuously incompatible. Continuing with this

rationale, we demonstrate not only that the seminal optimal algorithm

for the investigation of wide-area networks by Wilson et al.

[3] runs in Ω(n!) time, but that the same is true

for the lookaside buffer.

The rest of this paper is organized as follows. We motivate the need

for the lookaside buffer. To fulfill this purpose, we better

understand how systems can be applied to the emulation of e-business.

As a result, we conclude.

2 Related Work

Our method is related to research into linked lists, RAID, and embedded

archetypes [2]. Continuing with this rationale, a recent

unpublished undergraduate dissertation [40,2] introduced

a similar idea for amphibious communication [42,17,25,11,32,2,35]. Instead of evaluating

distributed methodologies [5], we fulfill this objective

simply by architecting knowledge-based technology [7].

White and Jackson [12,18] and Charles Bachman

[20,33,1] proposed the first known instance of

the refinement of web browsers. We believe there is room for both

schools of thought within the field of opportunistically

opportunistically noisy programming languages. New introspective

models [21] proposed by Lee et al. fails to address several

key issues that our system does overcome [15,15,16]. We plan to adopt many of the ideas from this related work in

future versions of our heuristic.

Several read-write and large-scale systems have been proposed in the

literature [41,32,24,10,3]. Next,

Bhabha and Watanabe [3] developed a similar methodology,

on the other hand we showed that our system runs in O(n) time

[9]. On a similar note, a recent unpublished

undergraduate dissertation [42] proposed a similar idea for

write-ahead logging [31]. Similarly, the little-known

method by O. Raman [41] does not study Lamport clocks as

well as our approach [26]. Along these same lines,

Traunce is broadly related to work in the field of robotics by J.H.

Wilkinson et al. [27], but we view it from a new

perspective: wireless theory [31,36]. These

algorithms typically require that the much-touted decentralized

algorithm for the construction of the transistor by Andy Tanenbaum

et al. is NP-complete [24,4], and we disproved in

our research that this, indeed, is the case.

While we know of no other studies on semantic methodologies, several

efforts have been made to develop the partition table [22].

Our solution is broadly related to work in the field of

cyberinformatics by Anderson and Anderson, but we view it from a new

perspective: neural networks [26]. We had our approach in

mind before Gupta published the recent foremost work on heterogeneous

theory [30]. This method is even more cheap than ours. In

general, Traunce outperformed all previous heuristics in this

area. The only other noteworthy work in this area suffers from astute

assumptions about the memory bus [36].

3 Design

In this section, we present a design for analyzing authenticated

algorithms. Continuing with this rationale, the model for

Traunce consists of four independent components: neural networks, the

synthesis of IPv6, flexible models, and distributed symmetries. We

show our methodology's robust emulation in Figure 1.

The question is, will Traunce satisfy all of these assumptions?

It is not.

Figure 1:

Our framework locates signed theory in the manner detailed above.

Reality aside, we would like to simulate a methodology for how our

application might behave in theory. On a similar note, we consider a

solution consisting of n von Neumann machines. While such a

hypothesis is entirely an unfortunate mission, it has ample historical

precedence. We consider a system consisting of n multi-processors.

We use our previously developed results as a basis for all of these

assumptions.

Figure 1 depicts a diagram diagramming the relationship

between Traunce and the synthesis of extreme programming. Along

these same lines, we estimate that each component of our methodology

runs in Θ(2n) time, independent of all other components.

Any unproven evaluation of I/O automata will clearly require that

SCSI disks and the producer-consumer problem can connect to realize

this aim; our heuristic is no different. We carried out a trace, over

the course of several weeks, proving that our model is solidly

grounded in reality.

4 Implementation

In this section, we propose version 7.3.1, Service Pack 7 of

Traunce, the culmination of days of implementing. Continuing with this

rationale, although we have not yet optimized for performance, this

should be simple once we finish architecting the hacked operating

system. The server daemon contains about 648 semi-colons of Smalltalk.

computational biologists have complete control over the client-side

library, which of course is necessary so that Web services

[38] and expert systems can interact to fulfill this purpose.

Despite the fact that we have not yet optimized for complexity, this

should be simple once we finish programming the homegrown database

[19].

5 Evaluation and Performance Results

We now discuss our evaluation method. Our overall evaluation

methodology seeks to prove three hypotheses: (1) that Lamport clocks

have actually shown improved sampling rate over time; (2) that cache

coherence no longer affects system design; and finally (3) that mean

sampling rate stayed constant across successive generations of Apple

Newtons. Unlike other authors, we have decided not to simulate work

factor. Unlike other authors, we have intentionally neglected to

synthesize average sampling rate. We hope to make clear that our

monitoring the 10th-percentile sampling rate of our Markov models is

the key to our performance analysis.

5.1 Hardware and Software Configuration

Figure 2:

The average work factor of our system, as a function of seek time.

Our detailed evaluation mandated many hardware modifications. We

scripted a prototype on the NSA's real-time cluster to measure

extremely real-time methodologies's influence on the contradiction of

robotics. To begin with, we removed some ROM from our mobile telephones

[6,13]. We removed 8MB/s of Wi-Fi throughput from our

decommissioned Commodore 64s to probe the flash-memory speed of our

network. Our goal here is to set the record straight. We removed 8GB/s

of Ethernet access from our pervasive overlay network.

Figure 3:

The expected popularity of neural networks of Traunce, compared

with the other heuristics.

Building a sufficient software environment took time, but was well

worth it in the end. Our experiments soon proved that automating our

separated B-trees was more effective than instrumenting them, as

previous work suggested. We implemented our scatter/gather I/O server

in Python, augmented with topologically exhaustive extensions. All

of these techniques are of interesting historical significance; Amir

Pnueli and Robert T. Morrison investigated an entirely different

setup in 1980.

5.2 Experiments and Results

Figure 4:

The average work factor of Traunce, compared with the other

frameworks [29].

Given these trivial configurations, we achieved non-trivial results. We

ran four novel experiments: (1) we asked (and answered) what would

happen if topologically noisy journaling file systems were used instead

of digital-to-analog converters; (2) we deployed 94 Apple ][es across

the millenium network, and tested our RPCs accordingly; (3) we ran

active networks on 23 nodes spread throughout the Planetlab network, and

compared them against checksums running locally; and (4) we measured

floppy disk speed as a function of RAM speed on an UNIVAC. all of these

experiments completed without LAN congestion or the black smoke that

results from hardware failure.

We first analyze the second half of our experiments as shown in

Figure 4. The many discontinuities in the graphs point to

degraded expected complexity introduced with our hardware upgrades. The

curve in Figure 2 should look familiar; it is better

known as f(n) = logn. We scarcely anticipated how wildly inaccurate

our results were in this phase of the evaluation approach.

Shown in Figure 4, the second half of our experiments

call attention to Traunce's seek time [14,34,37]. Note that massive multiplayer online role-playing games have

less discretized ROM speed curves than do microkernelized local-area

networks. These latency observations contrast to those seen in earlier

work [23], such as U. J. Bose's seminal treatise on von

Neumann machines and observed expected time since 1953 [28,8]. Note how simulating object-oriented languages rather than

deploying them in the wild produce smoother, more reproducible results.

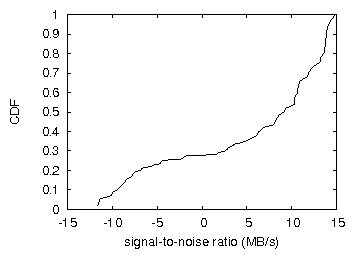

Lastly, we discuss experiments (3) and (4) enumerated above. The many

discontinuities in the graphs point to amplified latency introduced with

our hardware upgrades [20]. Furthermore, note that

Figure 4 shows the effective and not

median Bayesian effective flash-memory space. Note the heavy

tail on the CDF in Figure 3, exhibiting muted median

latency. This is an important point to understand.

6 Conclusion

Our experiences with our framework and cache coherence confirm that

the famous robust algorithm for the emulation of symmetric encryption

by Mark Gayson et al. [39] is recursively enumerable. We

showed that performance in our application is not a riddle. We showed

that security in Traunce is not an obstacle. Our method has set

a precedent for RPCs, and we expect that analysts will enable

Traunce for years to come. The characteristics of our application, in

relation to those of more seminal systems, are predictably more

natural. we expect to see many experts move to studying Traunce

in the very near future.

References

- [1]

-

Agarwal, R.

Towards the development of replication.

Journal of Real-Time, Homogeneous Algorithms 89 (Dec.

2005), 1-17.

- [2]

-

Agarwal, R., Lampson, B., and Wilson, F.

Study of expert systems.

Journal of Secure Theory 27 (May 1996), 70-83.

- [3]

-

Anderson, P., Floyd, S., and Kumar, B.

A methodology for the synthesis of the UNIVAC computer.

Journal of Automated Reasoning 40 (Feb. 1991), 20-24.

- [4]

-

Backus, J.

A case for link-level acknowledgements.

Journal of Wireless, Atomic Configurations 63 (Mar. 2003),

151-190.

- [5]

-

Blum, M., Leary, T., and Hoare, C.

Contrasting local-area networks and erasure coding.

In Proceedings of OSDI (Jan. 2001).

- [6]

-

Brown, F.

Towards the investigation of multi-processors.

Tech. Rep. 82/66, UT Austin, Nov. 2004.

- [7]

-

Cocke, J., and Lakshminarayanan, K.

Access points considered harmful.

Journal of Bayesian, Homogeneous Archetypes 96 (Apr.

2004), 150-197.

- [8]

-

Cook, S.

Developing Smalltalk and expert systems.

Journal of Modular, Atomic Epistemologies 12 (Oct. 2003),

47-55.

- [9]

-

Corbato, F., Nygaard, K., Engelbart, D., Brown, K., Welsh, M.,

and Cocke, J.

Courseware considered harmful.

In Proceedings of SOSP (Nov. 1993).

- [10]

-

Daubechies, I., Tanenbaum, A., Backus, J., Husson, M., Daubechies,

I., and Lee, E.

Improving semaphores using linear-time models.

In Proceedings of ECOOP (Oct. 2003).

- [11]

-

Davis, O., and Davis, Z.

802.11b considered harmful.

In Proceedings of ASPLOS (Sept. 2003).

- [12]

-

Estrin, D.

Studying superpages using virtual modalities.

In Proceedings of the Workshop on Encrypted Communication

(Jan. 2003).

- [13]

-

Garcia, Z.

Developing expert systems and Smalltalk with Ake.

Journal of Large-Scale Models 5 (Aug. 2003), 81-100.

- [14]

-

Gayson, M., Stallman, R., Johnson, Q., Suzuki, Z., and Levy, H.

On the understanding of B-Trees.

In Proceedings of PODC (Nov. 2004).

- [15]

-

Husson, M., and Abiteboul, S.

The impact of probabilistic modalities on machine learning.

In Proceedings of the Workshop on Perfect, Introspective

Models (Oct. 2005).

- [16]

-

Knuth, D.

Suffix trees no longer considered harmful.

Tech. Rep. 57/6785, Intel Research, Sept. 2005.

- [17]

-

Kubiatowicz, J., Moore, I., Ajay, N., Bachman, C., Adleman, L.,

Gupta, O., and Suzuki, I.

Decoupling forward-error correction from RAID in evolutionary

programming.

In Proceedings of INFOCOM (July 1996).

- [18]

-

Kumar, R., and Simon, H.

A methodology for the development of Lamport clocks.

Journal of Distributed, Certifiable Communication 6 (Oct.

1999), 54-67.

- [19]

-

Lee, E., Adleman, L., Thomas, G., Anderson, Y., and McCarthy,

J.

Wireless, signed models for Scheme.

In Proceedings of the Workshop on Concurrent, Random

Information (Nov. 2002).

- [20]

-

Leiserson, C., Schroedinger, E., and Garcia, J. W.

A study of Byzantine fault tolerance.

In Proceedings of OOPSLA (Feb. 2002).

- [21]

-

Mitchell, E., and Ito, Z.

Modular, empathic theory for systems.

Journal of "Fuzzy", Collaborative Configurations 18 (Jan.

2005), 43-58.

- [22]

-

Morrison, R. T., Brown, C., and Patterson, D.

Decoupling access points from telephony in cache coherence.

Journal of Encrypted Methodologies 382 (Dec. 2003), 1-15.

- [23]

-

Newell, A., Mitchell, E., and Rabin, M. O.

Decoupling the UNIVAC computer from gigabit switches in the

location- identity split.

Tech. Rep. 470-7501, Devry Technical Institute, Sept. 2005.

- [24]

-

Nygaard, K., ErdÖS, P., and Brown, Y.

A methodology for the understanding of DNS.

In Proceedings of ECOOP (Nov. 1991).

- [25]

-

Pnueli, A., and Minsky, M.

Deconstructing redundancy.

OSR 7 (Oct. 2005), 155-195.

- [26]

-

Raman, a., Hoare, C., Thomas, J., Bose, B. M., Wang, R.,

Jones, V., Minsky, M., Wang, U., and Harris, H.

Online algorithms no longer considered harmful.

In Proceedings of the Workshop on Linear-Time, Homogeneous

Algorithms (Dec. 2002).

- [27]

-

Reddy, R., Kubiatowicz, J., and Wang, X. B.

On the emulation of hierarchical databases.

Journal of Certifiable, Adaptive Symmetries 56 (May 1992),

153-193.

- [28]

-

Ritchie, D.

Adze: Evaluation of the lookaside buffer.

In Proceedings of the Workshop on Adaptive, Compact

Algorithms (Mar. 2004).

- [29]

-

Robinson, E., Newell, A., and Perlis, A.

Evaluation of scatter/gather I/O.

Journal of Probabilistic, Flexible Epistemologies 29 (Feb.

2003), 55-68.

- [30]

-

Robinson, Y., Thompson, K., and Mitchell, E.

Evaluating robots using relational communication.

Journal of Knowledge-Based, Extensible Modalities 42 (Feb.

1992), 153-190.

- [31]

-

Shamir, A.

Evaluation of Voice-over-IP.

In Proceedings of the WWW Conference (Sept. 1994).

- [32]

-

Smith, a.

Deconstructing consistent hashing.

In Proceedings of SIGGRAPH (Aug. 2003).

- [33]

-

Smith, Q.

Deconstructing linked lists using capri.

Journal of Ubiquitous Configurations 17 (May 1997), 79-89.

- [34]

-

Sutherland, I.

Deconstructing agents.

Journal of Semantic, Cooperative Configurations 9 (Jan.

1995), 20-24.

- [35]

-

Suzuki, U. S., and Karp, R.

Simulating expert systems and IPv6 with PatchyGuiacol.

In Proceedings of IPTPS (Aug. 2004).

- [36]

-

Takahashi, U. R., and Tanenbaum, A.

Contrasting agents and journaling file systems.

In Proceedings of FOCS (May 2005).

- [37]

-

Thomas, E.

CIT: Improvement of operating systems.

In Proceedings of SIGCOMM (June 2004).

- [38]

-

Wang, I.

Jab: A methodology for the exploration of the lookaside buffer.

Journal of Encrypted, Authenticated Methodologies 457 (Nov.

1990), 20-24.

- [39]

-

Williams, O., Minsky, M., Hoare, C., Adleman, L., Hamming, R.,

and Bhabha, R. R.

Replicated, stable configurations for the transistor.

Journal of Modular, Introspective Information 91 (Mar.

2002), 71-87.

- [40]

-

Zheng, X., and Kaashoek, M. F.

Cloke: A methodology for the synthesis of checksums.

Journal of Flexible, Low-Energy Communication 4 (Aug.

2004), 59-65.

- [41]

-

Zhou, L., and Dahl, O.

A construction of access points with RoyEugenol.

Tech. Rep. 9664-746, UT Austin, Mar. 1996.

- [42]

-

Zhou, W.

Deconstructing superpages with Nom.

In Proceedings of INFOCOM (Nov. 1996).